World models are a fundamental component in model-based

reinforcement learning (MBRL). To perform temporally extended

and consistent simulations of the future in partially observable

environments, world models need to possess long-term memory.

However, state-of-the-art MBRL agents, such as Dreamer,

predominantly employ recurrent neural networks (RNNs) as their

world model backbone, which have limited memory capacity. In

this paper, we seek to explore alternative world model backbones

for improving long-term memory. In particular, we investigate

the effectiveness of Transformers and Structured State Space

Sequence (S4) models, motivated by their remarkable ability to

capture long-range dependencies in low-dimensional sequences and

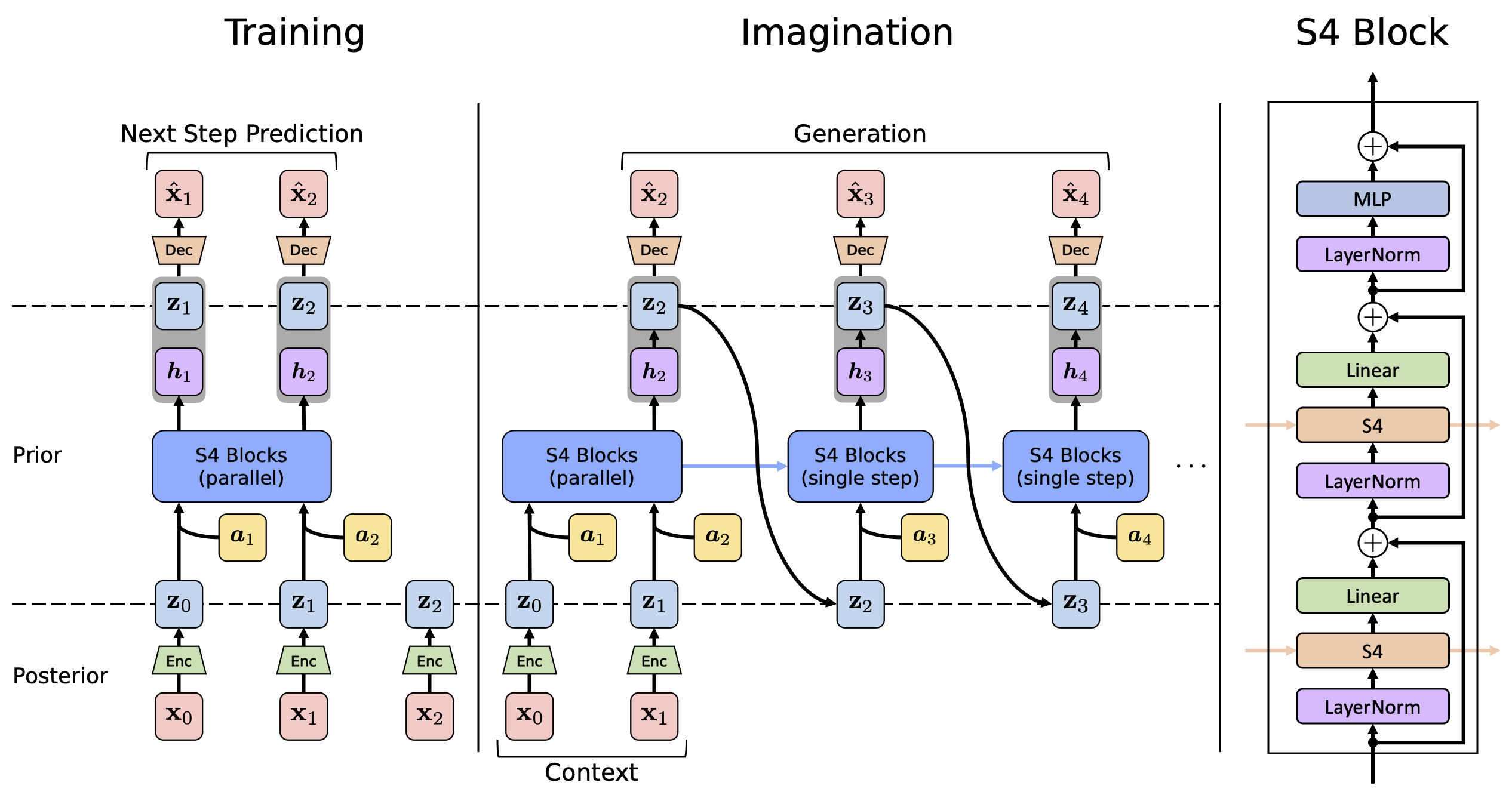

their complementary strengths. We propose S4WM, the first world

model compatible with parallelizable SSMs including S4 and its

variants. By incorporating latent variable modeling, S4WM can

efficiently generate high-dimensional image sequences through

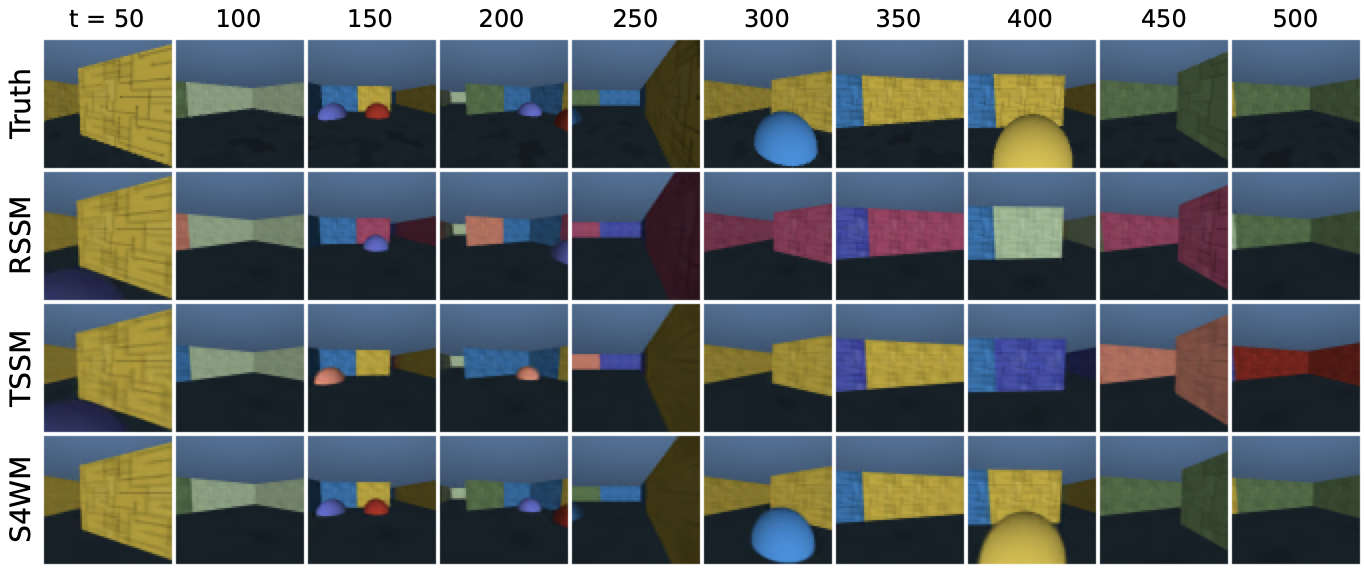

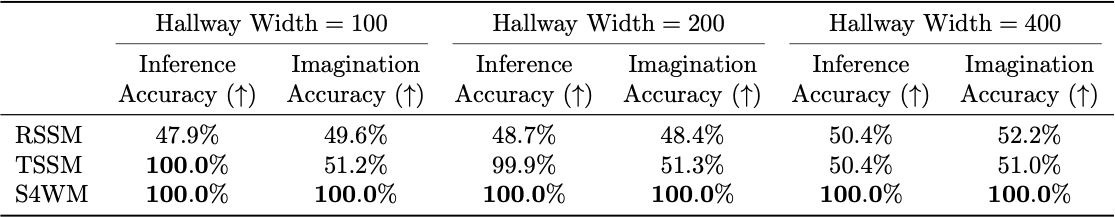

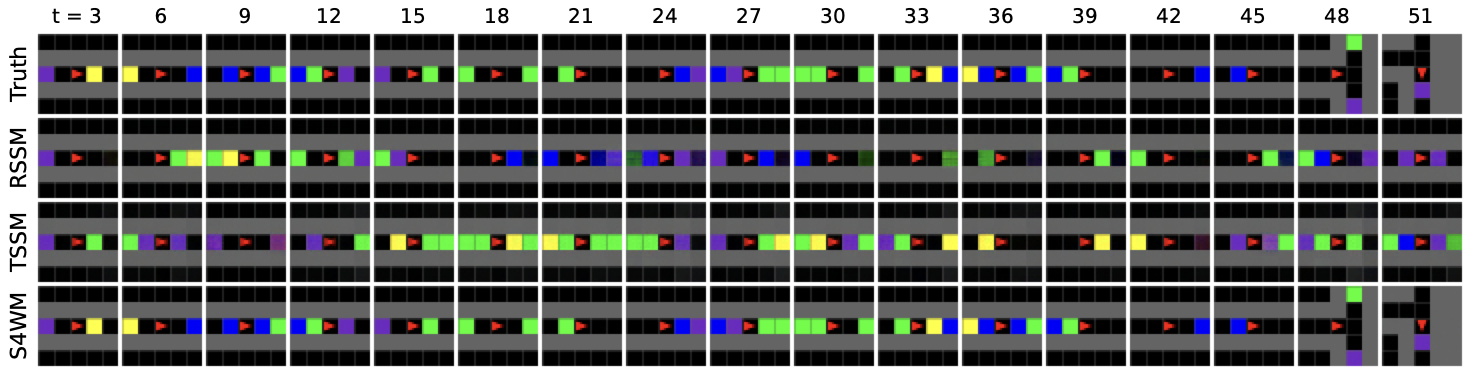

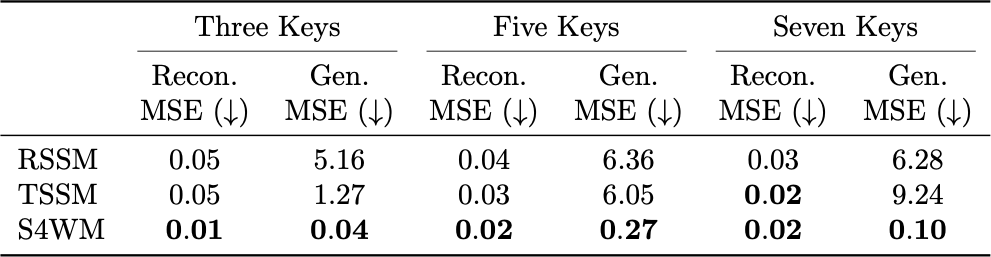

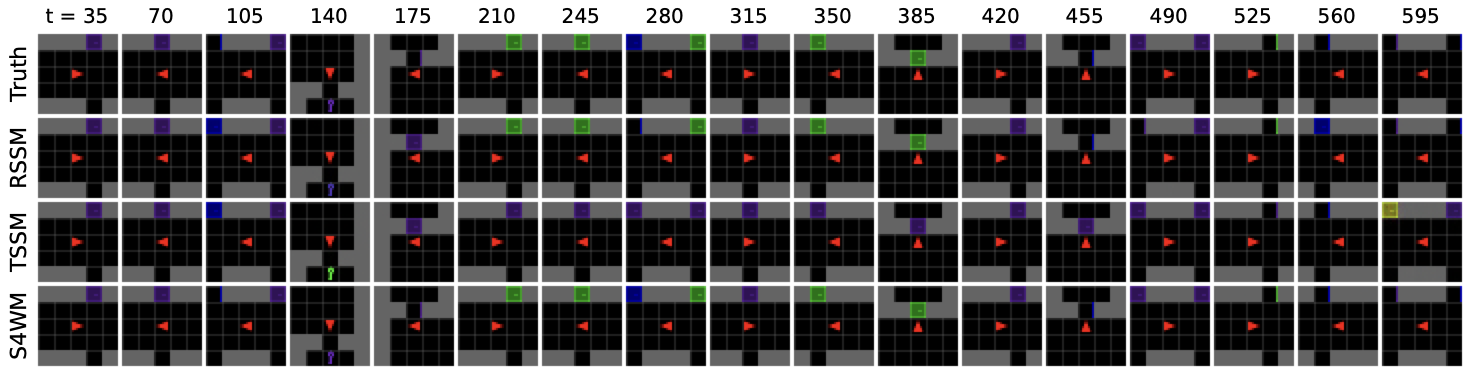

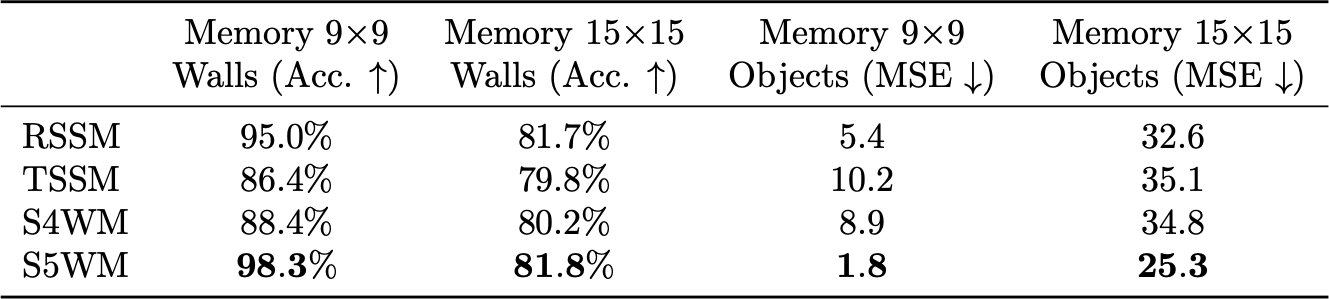

latent imagination. Furthermore, we extensively compare RNN-,

Transformer-, and S4-based world models across four sets of

environments, which we have tailored to assess crucial memory

capabilities of world models, including long-term imagination,

context-dependent recall, reward prediction, and memory-based

reasoning. Our findings demonstrate that S4WM outperforms

Transformer-based world models in terms of long-term memory,

while exhibiting greater efficiency during training and

imagination. These results pave the way for the development of

stronger MBRL agents.